Why you shouldn't clean your data without code

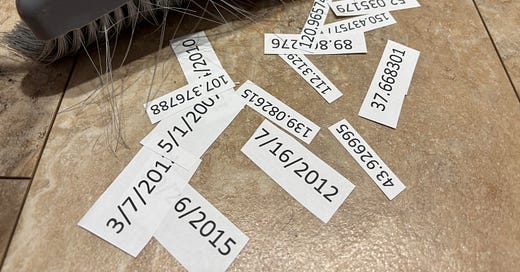

Cleaning data manually is like brushing your teeth with a Q-tip. It takes longer, and you'll miss the gunk under your gums and between your teeth. It's not worth the effort.

Do you work for an agency or organization that adds new data to existing data files and shares files with partners as data is updated? How often have you opened a data file and been frustrated by the glaring errors in data entry?

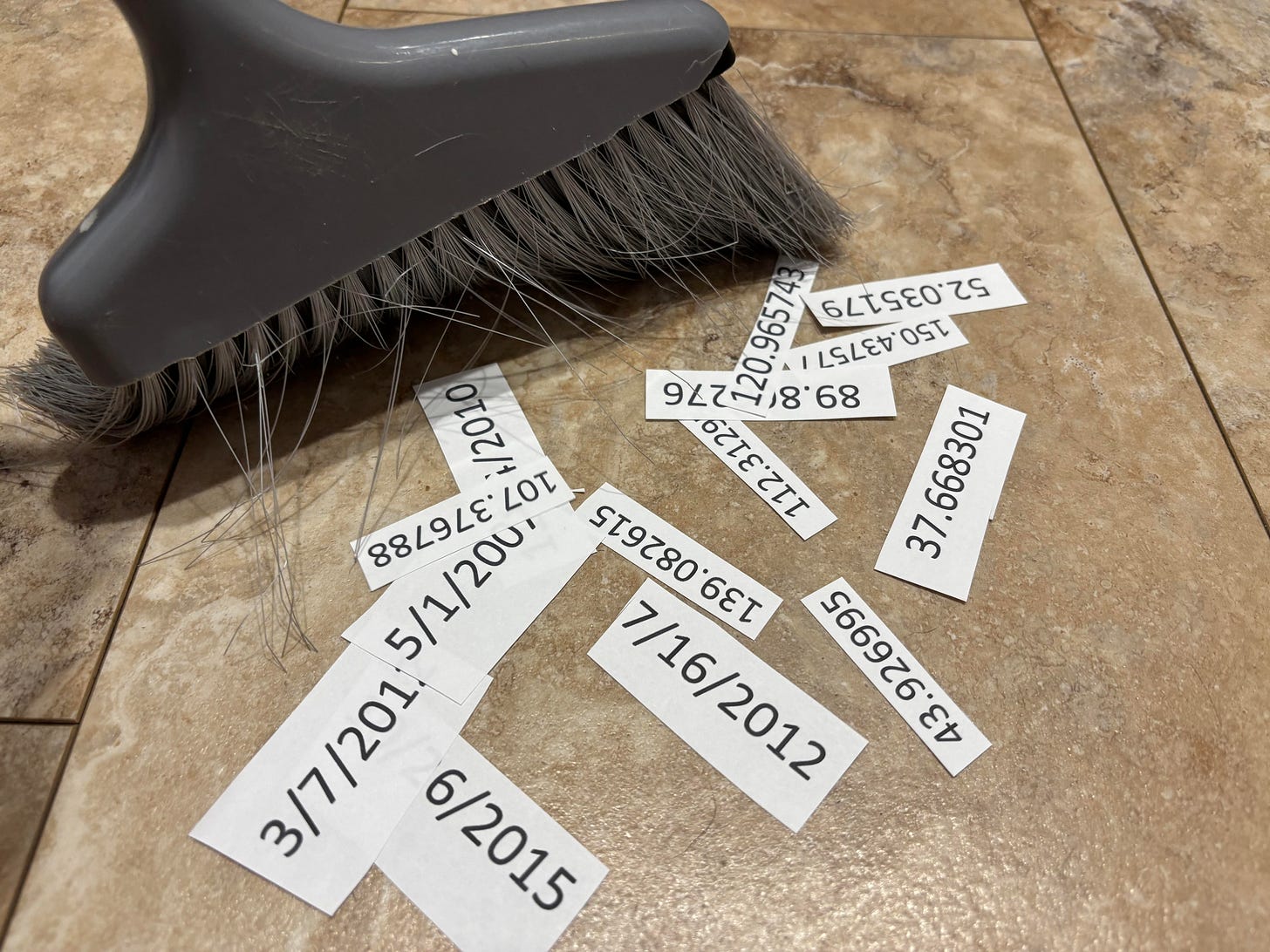

Common pitfalls of cleaning data manually:

1. It’s hard to catch all the errors.

2. You must repeat your cleaning process when new data is added to the file.

3. There's no record of the data-cleaning steps, and you carry out each step by hand, which is time-consuming and frustrating.

4. Data types can differ from cell to cell instead of there being one fixed data type per field (column). For example, a date column might hold some dates as text. This can cause problems when you later import, summarize, or analyze the data.
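To make pitfall 4 concrete, here is a minimal sketch of how code can enforce one type per column. It's written in Python using only the standard library (the same idea carries over to R). The `count` column, the integer rule, and the function names are hypothetical choices for illustration. Instead of silently keeping mixed types, the code converts every cell to an integer (or `None` for blanks) and reports which cells it couldn't parse, so you fix them once rather than hunting for them by eye.

```python
def to_int_or_none(cell):
    """Parse a cell as an integer; return None for blanks or unparseable text."""
    text = cell.strip()
    if text == "":
        return None
    try:
        return int(text)
    except ValueError:
        return None


def coerce_column(rows, column, parse):
    """Convert one column to a single type in place.

    Returns a list of (row_index, raw_value) pairs for non-blank cells
    that could not be parsed, so they can be reviewed and corrected.
    """
    problems = []
    for i, row in enumerate(rows):
        raw = row[column]
        value = parse(raw)
        if value is None and raw.strip() != "":
            problems.append((i, raw))
        row[column] = value
    return problems


# Hypothetical rows as they might arrive from a hand-edited spreadsheet.
rows = [{"count": "12"}, {"count": " 7 "}, {"count": "twelve"}, {"count": ""}]
print(coerce_column(rows, "count", to_int_or_none))
print([row["count"] for row in rows])
```

After this runs, the `count` column holds only integers or `None`, and the one cell entered as text is flagged for review instead of quietly breaking a later sum or average.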

Why it’s easier to clean data with code in R (or another language):

1. Code provides a repeatable process for cleaning your data. Just rerun the code on new data. It takes seconds. Okay, maybe minutes, depending on the amount of data and the complexity of your code. But it’s still fast, and you can enjoy a coffee (or chocolate!) while you wait.

2. Complex changes to the data can be completed with a few simple lines of code.

3. Once you write some basic code, you can easily apply the same code to other data.
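As a rough illustration of points 1 and 3, here is a minimal sketch of a reusable cleaning function, again in Python with only the standard library. The column names (`site`, `date`), the accepted date formats, and the specific cleaning rules are all hypothetical assumptions; your own data will need its own rules. The point is that the steps are written down once and can be rerun on an updated file, or pointed at a different file with the same columns.

```python
import csv
import io
from datetime import datetime

# Hypothetical date formats that might appear in hand-entered data.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d %b %Y")


def parse_date(text):
    """Return an ISO date string (YYYY-MM-DD), or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_rows(csv_text):
    """Apply the same cleaning steps to any CSV with 'site' and 'date' columns."""
    reader = csv.DictReader(io.StringIO(csv_text))
    seen = set()
    cleaned = []
    for row in reader:
        # Trim stray spaces in every cell (missing cells become "").
        row = {key.strip(): (value or "").strip()
               for key, value in row.items() if key is not None}
        # Standardize site names so "north", "North " and "NORTH" match.
        row["site"] = row["site"].title()
        # Store every date in one format; unparseable dates become None.
        row["date"] = parse_date(row["date"])
        # Drop rows that are exact duplicates after standardizing.
        key = tuple(sorted(row.items()))
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(row)
    return cleaned


raw = "site,date\nnorth ,2024-03-01\nNORTH,03/01/2024\nSouth,1 Mar 2024\nsouth,not a date\n"
for row in clean_rows(raw):
    print(row)
```

When partners send an updated file, you call `clean_rows` on the new text and get the same treatment in seconds, with the function itself serving as the record of every cleaning step.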

Wait a minute! You could learn VBA and write macros to clean your data in Excel. Maybe you already use macros, and that's great! But you'll need another language, such as R, if you want to run complex statistical analyses on your data without writing the mathematical formulas for models in Excel. And if you want to derive new fields, for example by summarizing the data or joining it to data in other tables, you'll have a much easier time doing so in R.

I assume you want clean data. If not, power on with whatever your current process may be! I wish you the best.